Technology

What is technology?

- Heidegger describes two theories of technology, the instrumental and the anthropological:

The instrumental theory of technology defines technologies as tools or instruments that human beings use as means to their ends. The anthropological theory of technology defines technologies as parts of distinctively human activities. The combined theory (“the instrumental and anthropological definition of technology”) represents technologies as means to ends within human activities.

— This is Technology Ethics, Sven Nyholm

- Post-phenomenology looks at how technology shapes the way we experience the world. It identifies two key aspects of our lives that technology influences:

- The way we perceive or experience the world and even ourselves.

- What we are able to do and how we are able to do it.

Because this theory argues that technology influences the way we value things around us, it claims that technology is not value-neutral. This position is a refined version of the anthropological theory of technology.

- Joanna Bryson's normative version of the instrumental theory of technology: All technologies should be things. In Kantian terms: They should be regarded as means, and never as ends in themselves.

Value Alignment

Values can be good or bad, and they can be instrumental (valued as a means to something else) or non-instrumental (valued for their own sake). The same goes for value alignment.

Quoting Iason Gabriel, Nyholm states that there are two problems with value alignment: the technical challenge of implementing value alignment (the how), and the normative problem of choosing the right values to align to (the what). Part of the first challenge is that it is very difficult to define precisely what we want. As humans, we have learned to understand others based on many different cues, so we can handle vague or underspecified requests. Machines, however, need precision. Famously, King Midas was granted his wish that everything he touched would turn to gold. This sounds nice, until you want to eat something.

Value alignment is not only a concern for future AI; it is already a highly relevant issue today. Algorithms (in social media, for example) can be misaligned with their users' values. I think this is largely the case: they maximize engagement and are hardly steerable. As a user, you outsource your decision about which content to discover to a black box.

Control and Personal Autonomy

Is control valuable only instrumentally, or also for its own sake? Chapter 5 has many good points on control.

Nyholm makes three general remarks about control:

- it is multi-dimensional

- it has degrees of robustness

- it has degrees of directness

The broad picture here is that technology represents a trade-off: it helps us do more things more easily, but at the cost of losing continuous control. This can be a problem, but it does not need to be. Value alignment is part of the solution.

To value control does not necessarily mean to try to maximize it:

5.71 When we philosophize more generally about control and its value, it is also worth reflecting on a claim made by the American philosopher T.M. Scanlon. In his 1998 book What We Owe to Each Other, Scanlon claims that to understand the value of something is to know how to properly value it. He thinks, for example, that when it comes to some values, the proper way to value them might not necessarily be to try to maximize them, but perhaps rather to cherish or to try to protect existing instances of them.

5.72 Consider friendship as an example. To properly understand the value of friendship, it might be thought, is not to try to maximize the number of friends one has, but instead to cultivate a smaller number of close friendships.

— This is Technology Ethics, Sven Nyholm

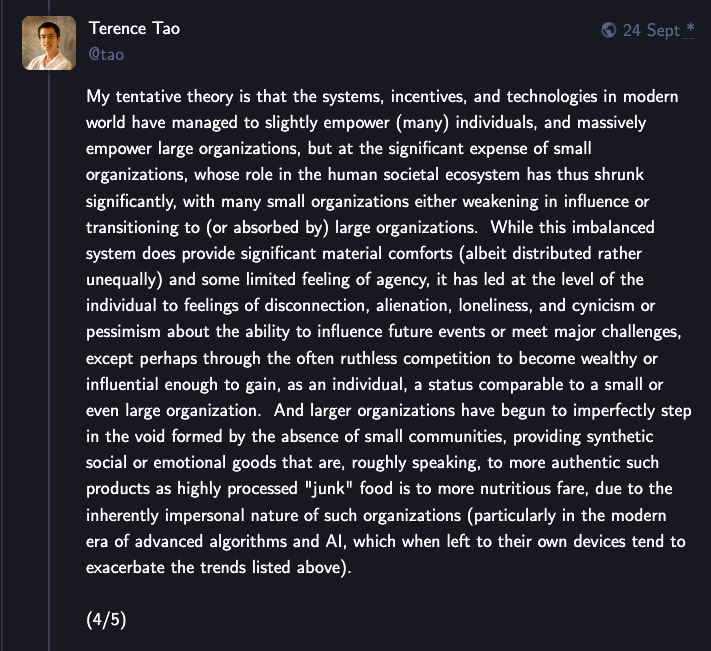

Below is an interesting, related post by the mathematician Terence Tao. Tao talks about empowerment, which I think is close enough to control. For present purposes, his point is that while individuals have gained material comfort, large organizations have gained control and smaller groups have lost it.